Raw Green Rust: Improvisation (with FluCoMa and UniSSON)

Keywords: Computer Music, Machine Listening, Signal Decomposition, Audiovisual Performance.

Raw Green Rust make abstract electronic music informed by wide-ranging musical tastes, using custom software instruments and processes with gestural controllers (Rawlinson, Green and Murray-Rust 2018, 2019). An important aspect of our improvising approach is to constantly sample and transform each other, in pursuit of an organic, shifting sound mass. Our performance practice builds on existing strands of work in creative computing, computer music and musicology but seeks to make playful and agile use of technology to explore shared musical agency.

We conceive of our work in ecosystemic terms (Waters 2007, Green 2011). Mutual connectivity through networked audio and control data offers radical possibilities for making sonic outcomes that are fluid, shared and responsive through performative agency that is distributed across people and processes. Performative agency in software can be found in the software’s capacity to act as a conduit and focus of interaction and exchange, as an object that can influence or change behaviours (Bown, Eldridge and McCormack 2009). For Raw Green Rust, this can be found in applications of the FluCoMa and UniSSON toolsets, alongside our already well-established plunderphonic aesthetic. [1]

Fluid Corpus Manipulation

Fluid Corpus Manipulation (FluCoMa) [2] is a five-year ERC-funded project, focusing on musical practices that work with collections of recorded audio and machine listening technologies. The project will produce software toolkits, learning resources and a community platform, in the hope of facilitating distinctively artistic and divergent approaches to researching machine listening (Green, Tremblay and Roma 2018).

In the context of this performance, the focus is on how techniques such as audio novelty measurement, dimensionality reduction and clustering can be used to facilitate live corpus co-creation between the members of the group. What effects might this have on the timescales over which we can quote and transform each other’s gestures?
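To make the three techniques named above concrete, the following is a minimal sketch in Python using only NumPy. It is not the FluCoMa toolkit's API and not the patch used in performance. Spectral flux stands in for novelty measurement, PCA (via SVD) for dimensionality reduction, and a plain k-means loop for clustering. All function names, frame sizes and the synthetic two-tone test signal are assumptions made for illustration.

```python
import numpy as np

def spectral_frames(signal, frame=256, hop=128):
    """Magnitude spectra of overlapping Hann-windowed frames."""
    window = np.hanning(frame)
    starts = range(0, len(signal) - frame + 1, hop)
    return np.array([np.abs(np.fft.rfft(signal[s:s + frame] * window))
                     for s in starts])

def novelty_curve(spectra):
    """Spectral flux: summed positive spectral change between frames."""
    diff = np.diff(spectra, axis=0)
    flux = np.maximum(diff, 0.0).sum(axis=1)
    return np.concatenate([[0.0], flux])  # first frame has no predecessor

def pca_2d(features):
    """Project feature vectors onto their first two principal components."""
    centered = features - features.mean(axis=0)
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return centered @ vt[:2].T

def kmeans(points, k, iterations=20, seed=0):
    """Plain k-means; returns one cluster label per point."""
    rng = np.random.default_rng(seed)
    centroids = points[rng.choice(len(points), size=k, replace=False)]
    for _ in range(iterations):
        distances = np.linalg.norm(
            points[:, None, :] - centroids[None, :, :], axis=2)
        labels = distances.argmin(axis=1)
        for j in range(k):
            if np.any(labels == j):  # leave empty clusters where they are
                centroids[j] = points[labels == j].mean(axis=0)
    return labels

# Hypothetical stand-in for a sampled gesture: two tones, one after another.
sample_rate = 8000
t = np.arange(sample_rate // 2) / sample_rate
signal = np.concatenate([np.sin(2 * np.pi * 440 * t),
                         np.sin(2 * np.pi * 2000 * t)])

spectra = spectral_frames(signal)
novelty = novelty_curve(spectra)
boundary_frame = int(np.argmax(novelty))    # frame where the sound changes
labels = kmeans(pca_2d(spectra), k=2)       # frames grouped by timbre
```

In a live setting the same chain would run on incoming audio from another player: novelty peaks suggest where to slice, and cluster labels suggest which slices belong together in the shared corpus.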

Unity Supercollider Sound Object Notation

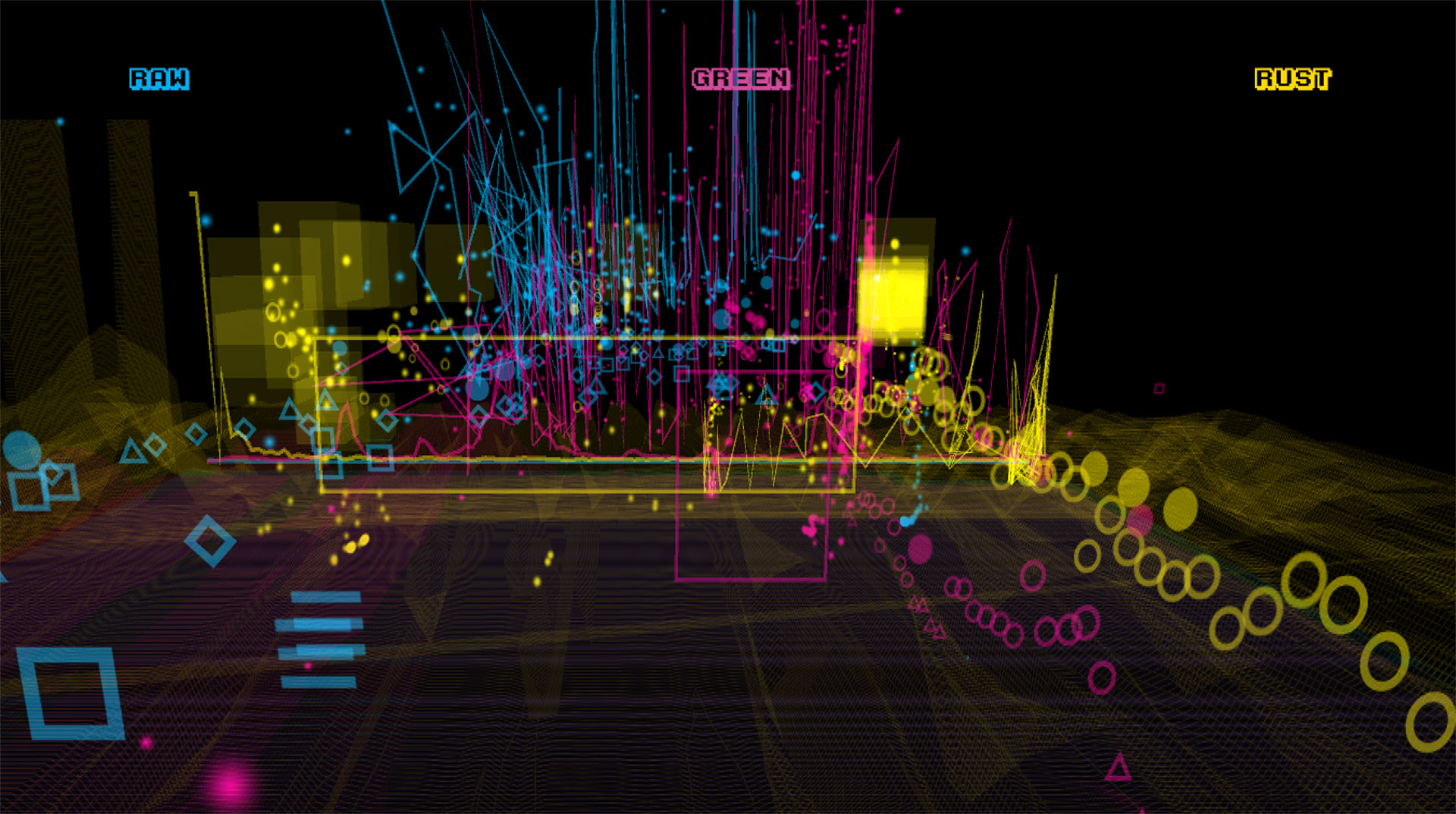

The sound-symbol relationship is the main focus of the Unity Supercollider Sound Object Notation project (UniSSON) [3] (Rawlinson and Pietruszewski 2019).

In electronic music, especially by groups, it can be hard for audiences and performers to know who is doing what, as movement and action are decoupled from sonic results. If the audience and performers cannot perceive each contribution audibly or visibly (at a gestural level), how might it otherwise be represented and communicated?

The main output from this research is a suite of software tools that presents a real-time multi-temporal and multi-resolution view of sonic data. The current state of the software gives a clear view of which audio features belong to which player, and indicates relationships between events/streams and gestures while exploring legibility and co-agency in laptop performance.

Acknowledgements

FluCoMa has received funding from the European Research Council (ERC) under the European Union’s Horizon 2020 research and innovation programme (grant agreement No 725899).

Notes

- [1] A Raw Green Rust performance can be heard at rawgreenrust.bandcamp.com.

- [2] For more information see www.flucoma.org.

- [3] For more information see www.pixelmechanics.com/unisson.

References

- Bown, O, Eldridge, A, & McCormack, J. 2009. “Understanding Interaction in Contemporary Digital Music: from instruments to behavioural objects.” Organised Sound, vol. 14, no. 2, pp. 188–196.

- Green, O. 2011. “Agility and Playfulness: Technology and skill in the performance ecosystem.” Organised Sound, vol. 16, no. 2, pp. 134–144.

- Green, O, Tremblay, PA, & Roma, G. 2018. “Interdisciplinary Research as Musical Experimentation: A case study in musicianly approaches to sound corpora.” Electroacoustic Music Studies Network Conference 2018.

- Rawlinson, J, Green, O, & Murray-Rust, D. 2018. Raw Green Rust: Laptop Trio. Sonorities Festival, Belfast.

- Rawlinson, J, Green, O, & Murray-Rust, D. 2019. Raw Green Rust: Improvisation with machine-learning and visualization. Beyond Festival, Heilbronn.

- Rawlinson, J, & Pietruszewski, M. 2019. “Visualizing co-agency and aiding legibility of sonic gestures for performers and audiences of multiplayer digital improvisation with UniSSON (Unity Supercollider Sound Object Notation).” Convergence International Conference/Festival of Music, Technology and Ideas 2019.

- Waters, S. 2007. “Performance Ecosystems: Ecological Approaches to Musical Interaction.” Proceedings of the Electroacoustic Music Studies Network Conference 2007.
